Revolutionize Business with Agentic AI

Agentic AI Driving Real-World ROI

Construction SaaS

Solution Focus:

Autonomous Multi-Agent Reporting (23-Language Support)

Key Outcome:

68% Faster task reporting. 86% Fewer manual errors.

Digital Marketing

Solution Focus:

Gemini-Powered Conversational

Key Outcome:

80% Automated Responses. 60% Drop in complex process abandonment.

Sustainable Construction

Solution Focus:

6-Agent Knowledge Assistant

Key Outcome:

Knowledge Access in Seconds. Zero Hallucination with guaranteed document citations.

Leverage Agentic AI to transform complex insights into autonomous, self-managing enterprise workflows.

Deep AI Expertise for Transformative Impact

At Ankercloud, we deliver production-grade agentic AI solutions that automate decisions, create content, and drive large-scale business transformation with minimal human intervention.

Customised AI for Your Business

Custom AI solutions break down intricate challenges into structured, manageable tasks, leveraging historical data, context awareness, and real-time insights. These tailored AI systems plan intelligently, adapt dynamically, and deliver precise, creative, business-focused outcomes that empower smarter decision-making across the organization.

AI That Works Like a Teammate, Not a Tool

Custom-built AI workflows communicate naturally and collaborate seamlessly, enabling human-like interactions that streamline tasks. They automate information flow, reduce manual effort, and anticipate user needs through proactive assistance, boosting productivity without requiring constant supervision.

Automation That Turns Manual Tasks into Autopilot

Integrated with live data, enterprise systems, and APIs, custom AI automation autonomously executes tasks from retrieval to action. With built-in safeguards, it ensures accuracy, reliability, and operational integrity, delivering real-time insights and results that keep the business moving forward.

Core Technical Capabilities

Power of Partnership: Ankercloud + Cloud Leaders

We integrate native AI and GenAI services from AWS, Google Cloud, and Microsoft Azure with Ankercloud’s proprietary expertise to deliver:

Secure & Compliant AI Architectures: Full-stack encryption, IAM, and compliance frameworks for GDPR, HIPAA, ISO 27001.

Scalable Cloud-Native Infrastructure: Autoscaling GPU clusters, high availability, disaster recovery, and cost-optimized consumption models.

Innovative GenAI Platforms: Solutions like VisionForge, combining GenAI with AWS infrastructure for large-scale enterprise content generation.

Tried & Tested Industry Use Cases

Our solutions are tested in real-world environments, driving measurable value across diverse sectors.

Manufacturing

Automated order entry, validation, and processing through multi-agent workflows, integrated with OCR and ERP systems for real-time accuracy.

Customer Support

Multi-agent AI systems handle ticket triage, respond to common queries, and escalate complex issues to the right teams, cutting response times and boosting service quality.

Healthcare & InsurTech

The system uses three agents working in sync: one extracts structured data from medical reports and claim documents, another automates claim checks by applying rules and flagging issues, and a third validates clinical terms, codes, and diagnoses for guideline compliance.
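As a rough illustration of the three-agent pattern described above, the sketch below chains an extraction step, a rule-check step, and a code-validation step in plain Python. Every name here (`Claim`, `extract_agent`, `rules_agent`, `coding_agent`), the regular expressions, the approval limit, and the tiny code list are hypothetical stand-ins rather than Ankercloud's implementation; in a real deployment each function would wrap an LLM call or a downstream service.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Claim:
    """Structured view of a claim document (hypothetical schema)."""
    raw_text: str
    amount: Optional[float] = None
    diagnosis_code: Optional[str] = None
    issues: List[str] = field(default_factory=list)


KNOWN_CODES = {"J45.909", "E11.9"}  # illustrative stand-in for a clinical code set
MAX_AUTO_APPROVE = 5000.0           # hypothetical business rule


def extract_agent(claim: Claim) -> Claim:
    """Agent 1: pull structured fields out of the free text."""
    amount = re.search(r"amount:\s*(\d+(?:\.\d+)?)", claim.raw_text, re.I)
    code = re.search(r"diagnosis:\s*([A-Z]\d{2}(?:\.\d+)?)", claim.raw_text, re.I)
    claim.amount = float(amount.group(1)) if amount else None
    claim.diagnosis_code = code.group(1).upper() if code else None
    return claim


def rules_agent(claim: Claim) -> Claim:
    """Agent 2: apply claim rules and flag anything that needs review."""
    if claim.amount is None:
        claim.issues.append("missing amount")
    elif claim.amount > MAX_AUTO_APPROVE:
        claim.issues.append("amount exceeds auto-approval limit")
    return claim


def coding_agent(claim: Claim) -> Claim:
    """Agent 3: validate the diagnosis code against the known code set."""
    if claim.diagnosis_code is None:
        claim.issues.append("missing diagnosis code")
    elif claim.diagnosis_code not in KNOWN_CODES:
        claim.issues.append(f"unknown diagnosis code {claim.diagnosis_code}")
    return claim


def run_pipeline(claim: Claim) -> Claim:
    """Run the three agents in sequence; each one enriches the same claim."""
    for agent in (extract_agent, rules_agent, coding_agent):
        claim = agent(claim)
    return claim


result = run_pipeline(Claim("Amount: 9000 Diagnosis: Z99.9"))
print(result.issues)
```

Keeping each agent as a function over a shared record makes it easy to add, reorder, or replace a step without touching the others.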

SaaS & EdTech

Recommendation engines, NLP-driven conversational bots, and personalized content delivery.

Check out our blog

The Future of Autonomous Workflows: Agentic AI by Ankercloud

The Automation Paradox: Why Traditional ML is Hitting a Wall

Enterprises today are caught in a paradox: they need to innovate faster than ever, yet their core machine learning models require manual intervention, resulting in delays, inconsistent outcomes, and scalability challenges. Traditional AI is powerful, but it often relies on fragmented processes and constant "babysitting": someone has to manually shepherd data preparation, deployment, and monitoring. This operational friction turns promising AI projects into bottlenecks.

This is why Agentic AI represents a strategic necessity. It is the evolution from reactive data modeling to proactive, goal-driven automation. Agentic AI shifts the paradigm by acting autonomously, orchestrating complex workflows end-to-end to deliver results without constant human oversight. This allows businesses to accelerate innovation and unlock new sources of value that were previously unattainable.

Agentic AI: The New Paradigm of Goal-Driven Autonomy

Agentic AI is a new class of intelligent systems designed not just to process data, but to take initiative and complete high-level objectives. The key differentiator is autonomy:

- Autonomy in Action: Agentic AI can orchestrate the entire ML lifecycle, from identifying and preparing data to training models, deploying them, and ensuring continuous monitoring, making ML projects truly end-to-end and repeatable.

- Faster Delivery with Consistency: This automation allows our customers to scale their AI initiatives without the usual bottlenecks, achieving accelerated time-to-value and continuous enhancement with superior reliability.

This shift means your organization is no longer deploying static code; you are deploying intelligent systems that learn, adapt, and drive business goals forward.

The Collaborative Future: Agents, Protocols, and Speed

The true power of Agentic AI is its ability to break down silos and enable fluid collaboration, not just between humans and AI, but between multiple AI agents themselves.

- Seamless Connectivity: Agentic AI incorporates data and APIs from disparate sources, regardless of their location or format, into cohesive, orchestrated workflows.

- The Collaboration Layer: Ankercloud leverages emerging standards such as the Model Context Protocol (MCP), which standardizes how agents reach tools and data, and the Agent2Agent (A2A) protocol, which standardizes how agents talk to each other. These protocols enable multiple agents and sub-agents to collaborate much like a real-world problem-solving team, delivering smarter, fully automated workflows that address complex industry challenges with precision.

This connectivity enhances integration across all your enterprise systems, allowing us to deliver offerings previously out of reach, such as intelligent chatbots, complex data processing pipelines, and dynamic content generation tailored to customer needs.

Ankercloud: Your Architect for the Autonomous Enterprise

Transitioning to autonomous workflows requires more than just access to powerful models; it demands specialized expertise in cloud architecture, security, and continuous governance.

- Secure Cloud Expertise: As a premier partner of Google Cloud Platform (GCP) and AWS, Ankercloud brings unparalleled expertise. We integrate secure, reliable cloud-native managed services like Google Vertex AI Agents and Amazon Bedrock Agents into our solutions. This approach is designed to deliver high accuracy, robust performance, and regulatory compliance while minimizing operational overhead for our clients.

- Methodology and Trust: Agentic AI is a strategic enabler that drives substantial, measurable business outcomes. Paired with Ankercloud’s deep cloud security insights and mastery of the full ML lifecycle, customers gain confidence in adopting autonomous workflows that transform efficiency, boost quality, and increase agility across their operations.

Conclusion: The Future of Work is Autonomous

The competitive landscape of the future belongs to enterprises that can successfully implement goal-driven automation. Agentic AI is not just about technology; it’s about a new strategic methodology that frees your organization from manual, repetitive tasks, allowing your human talent to focus on innovation and high-value strategic initiatives.

Are you ready to unlock the potential of Agentic AI?

Partner with Ankercloud to begin your journey toward the autonomous future, where AI works smarter, faster, and safer for your enterprise.

The Rise of the Solo AI: Understanding How Intelligent Agents Operate Independently

The world of Artificial Intelligence is evolving at breakneck speed, and if you thought Generative AI was a game-changer, prepare yourself for the next frontier: Agentic AI. This isn't just about AI creating content or making predictions; it's about AI taking initiative, making decisions, and autonomously acting to achieve defined goals, all without constant human oversight. Welcome to a future where your digital workforce is not just smart, but truly agentic…

What exactly is Agentic AI? The Future of Autonomous Action

Think of traditional AI as a highly intelligent assistant waiting for your commands. Generative AI then empowered this assistant to create original content based on your prompts. Now, with Agentic AI, this assistant becomes a proactive, self-managing colleague or robot.

Agentic AI systems are characterized by the following capabilities:

- Autonomy: They can perform tasks independently, making decisions and executing actions without constant human intervention.

- Adaptability: They learn from interactions, feedback, and new data, continuously refining their strategies and decisions.

- Goal-Orientation: They are designed to achieve specific objectives, breaking down complex problems into manageable steps and seeing them through.

- Tool Integration: They can seamlessly interact with various software tools, databases, and APIs to gather information and execute tasks, much like a human would.

- Reasoning and Planning: Beyond simple rule-following, Agentic AI can reason about its environment, plan multi-step processes, and even recover from errors.
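To make the characteristics above more concrete, here is a minimal sketch of a goal-driven loop that plans steps, calls tools, and recovers from a failed step. It is an illustration under stated assumptions, not a production agent: the tools (`lookup_order`, `send_email`), the order data, and the hard-coded `plan` function are all hypothetical, and a real agent would typically ask an LLM to produce the plan.

```python
from typing import Callable, Dict, List, Tuple

Tool = Callable[[str], str]


def lookup_order(order_id: str) -> str:
    """Hypothetical tool: pretend to query an order database."""
    orders = {"A100": "shipped", "B200": "delayed"}
    if order_id not in orders:
        raise ValueError(f"unknown order {order_id}")
    return orders[order_id]


def send_email(body: str) -> str:
    """Hypothetical tool: pretend to send a notification."""
    return f"sent: {body}"


TOOLS: Dict[str, Tool] = {"lookup_order": lookup_order, "send_email": send_email}


def plan(goal: str) -> List[Tuple[str, str]]:
    """Stand-in planner: break the goal into (tool, argument) steps."""
    order_id = goal.split()[-1]
    return [("lookup_order", order_id), ("send_email", "status for " + order_id)]


def run_agent(goal: str, max_retries: int = 1) -> List[str]:
    """Execute each planned step, retrying on failure and logging every observation."""
    log: List[str] = []
    for tool_name, arg in plan(goal):
        for attempt in range(max_retries + 1):
            try:
                log.append(f"{tool_name} -> {TOOLS[tool_name](arg)}")
                break  # step succeeded, move on to the next one
            except Exception as exc:
                log.append(f"{tool_name} failed ({exc}); attempt {attempt + 1}")
        else:
            # Every attempt failed: stop, because later steps depend on this one.
            log.append(f"giving up on {tool_name}")
            break
    return log


for line in run_agent("notify customer about order A100"):
    print(line)
```

The retry-then-stop branch is the simplest form of error recovery; a fuller agent might re-plan instead of giving up.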

This evolution from reactive to proactive AI is not just a technological leap; it's a paradigm shift that promises to redefine how businesses operate. Gartner projects that by 2028, 33% of enterprise software applications will have integrated Agentic AI, a dramatic increase from less than 1% in 2024, highlighting its rapid adoption.

The Impact is Real: Why Agentic AI is a Trending Imperative

Businesses are no longer just experimenting with AI; they are investing heavily in it. A recent IBM study revealed that executives expect AI-enabled workflows to surge eightfold by the end of 2025, with Agentic AI at the core of this transformation. Why the urgency? Because the benefits are profound:

- Boosted Productivity & Efficiency: Imagine repetitive, time-consuming tasks being handled entirely by AI agents, freeing up your human workforce to focus on strategic initiatives and creative problem-solving.

- Enhanced Decision-Making: Agentic AI can analyze vast datasets in real-time, identify patterns, and provide actionable insights, leading to more informed and proactive business decisions.

- Cost Reduction: Automating complex processes and optimizing resource allocation directly translates into significant cost savings.

- Unlocking New Revenue Streams: By automating customer interactions, personalizing experiences, and optimizing operations, Agentic AI can directly contribute to increased sales and market expansion.

- Improved Employee and Customer Experience: From streamlined internal workflows to hyper-personalized customer service, Agentic AI elevates interactions across the board.

- Competitive Advantage: Early adopters of Agentic AI are already seeing a distinct edge in their respective markets, setting new standards for innovation and operational excellence.

Top Use Cases: Where Agentic AI Shines Brightest

The applications of Agentic AI are vast and growing across every industry. Here are some of the top use cases where it's already making a significant impact:

- Smart Manufacturing:

  - Predictive Maintenance & Quality Control: Agentic AI monitors equipment in real time, predicts failures, and schedules maintenance to prevent unplanned downtime while also using computer vision to detect product defects and reduce waste by up to 60%.

  - Autonomous Inventory & Supply Chain Optimization: AI agents track inventory levels, forecast demand, and optimize supply chain logistics to avoid stockouts or overstocking, dynamically adjusting to market changes and disruptions for cost efficiency and seamless operations.

- Smart Robots:

  - Dynamic Task Allocation & Autonomous Assembly: Agentic AI enables robots to adapt to new tasks and environments in real time, optimizing assembly processes and resource usage for faster, more flexible production with minimal human intervention.

  - Collaborative Robotics (Cobots) & Real-Time Monitoring: AI-powered robots work safely alongside humans, adjusting behaviors based on real-time conditions, and continuously monitor production lines to detect anomalies and ensure quality and safety.

- Customer Service & Engagement:

  - Autonomous Support Agents: Beyond traditional chatbots, agentic AI can independently resolve complex customer inquiries, access and analyze live data, offer tailored solutions (e.g., refunds, expedited orders), and update records.

  - Personalized Customer Journeys: Anticipating customer needs and preferences, agentic AI can proactively offer relevant products, services, and support, enhancing satisfaction and loyalty.

- Finance & Fraud Detection:

  - Automated Trading: Analyzing market data and executing trades autonomously to optimize investment decisions.

  - Enhanced Fraud Detection: Proactively identifying and flagging suspicious patterns in transactions and user behavior to mitigate financial risks.

- Software Development & IT Operations (DevOps):

  - Automated Code Generation & Testing: AI agents can generate code segments, provide real-time suggestions, and automate software testing, accelerating development cycles.

  - Proactive System Monitoring & Maintenance: Continuously scanning for anomalies, triggering automated responses to contain threats, and scheduling predictive maintenance.

- Human Resources (HR):

  - Automated Recruitment: From screening resumes and scheduling interviews to simulating interview experiences for candidates.

  - Personalized Onboarding: Tailoring onboarding sessions and providing relevant information to new hires.

Ankercloud's Agentic AI Solutions: Your Partner in the Autonomous Future

At Ankercloud, we don't just talk about Agentic AI; we build and deploy real-world solutions that deliver tangible business value. We combine cutting-edge technology with our deep industry expertise to help you navigate the complexities of this new frontier.

Our approach to Agentic AI is rooted in a fundamental understanding of your business needs. We work closely with you to:

- Analyze Existing Workflows: We identify opportunities where Agentic AI can significantly enhance efficiency and outcomes.

- Integrate Human-in-the-Loop Solutions: Our solutions are designed to augment, not replace, your human workforce, ensuring critical oversight and collaboration.

- Seamless Integration: We design AI agents that integrate effortlessly with your existing systems (ERPs, CRMs, finance tools) to enhance workflows without disruption.

- Custom GenAI Models: We develop bespoke Agentic AI models tailored to your specific business goals, leveraging the power of Generative AI for advanced reasoning and content generation.

- Industry-Specific Expertise: Our experience spans diverse industries, allowing us to build solutions that address your unique challenges and opportunities.

- Robust Governance and Security: We embed ethical guardrails, robust security protocols, and explainable AI capabilities from the outset, ensuring responsible and trustworthy autonomous operations.

The future of business is autonomous, adaptive, and intelligent. Agentic AI is no longer a concept; it's a tangible reality that is reshaping industries and creating new opportunities for growth.

Are you ready to unlock the full potential of Agentic AI for your business?

Contact Ankercloud today to explore how our Agentic AI solutions can transform your operations and propel you into the autonomous future.

Supercharge Your AI Systems with Gemini 1.5: Advanced Features & Techniques

In the ever-competitive race to build faster, smarter, and more aware LLMs, nearly every month brings a major announcement of a new family of models. The Gemini 1.5 family, consisting of Flash and Pro, has been available since May 2024, so many developers have already had the chance to work with it. Any of those developers can tell you one thing: Gemini 1.5 isn’t just a step ahead. It’s a great big leap forward.

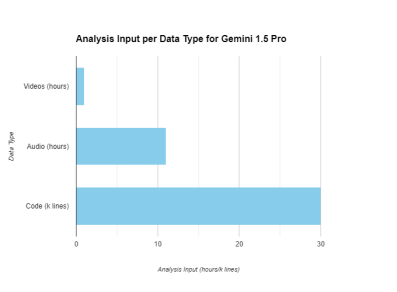

Gemini is an extremely versatile model, and it comes equipped with an input context window of up to 2 million tokens on Gemini 1.5 Pro. This means that prompts can be massive. One might expect that to hurt the quality of the model’s outputs, but Gemini consistently delivers accurate, compliant, and context-aware responses even with very long prompts. Long prompts also mean that users can send large data files along with the prompt. By Google's published figures, 1 million tokens corresponds to roughly 1 hour of video, 11 hours of audio, or 30,000 lines of code, so the 2 million token window of Gemini 1.5 Pro can analyze about twice that in a single request.

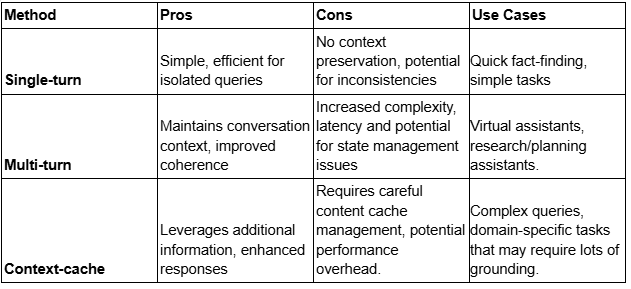
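For a quick feel of what fits in the context window, the sketch below estimates token counts for plain text and checks them against a window size. It assumes the common rule of thumb of about 4 characters per token for English text and rounded window sizes of 2 million tokens for Gemini 1.5 Pro and 1 million for Gemini 1.5 Flash; the helper names and the `reserve` margin are illustrative, and the SDK's `count_tokens` method should be used when an exact figure matters.

```python
from typing import Iterable

CHARS_PER_TOKEN = 4  # rough rule of thumb for English text (assumption)

# Rounded context window sizes in tokens (assumption; check the current model docs).
CONTEXT_WINDOWS = {
    "gemini-1.5-pro": 2_000_000,
    "gemini-1.5-flash": 1_000_000,
}


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a string using ceiling division."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_window(texts: Iterable[str], model: str, reserve: int = 8192) -> bool:
    """Return True if all texts plus a reserve for the reply fit in the model's window."""
    total = sum(estimate_tokens(t) for t in texts)
    return total + reserve <= CONTEXT_WINDOWS[model]


document = "x" * 4_000_000  # about one million estimated tokens
print(fits_in_window([document], "gemini-1.5-pro"))
print(fits_in_window([document], "gemini-1.5-flash"))
```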

With all that being said, the right approach to building a conversational system with Gemini 1.5 depends greatly on what the developer is trying to achieve.

All the methods require a general set-up procedure:

1. Google Cloud Project: A GCP project with the Vertex AI API enabled to access the Gemini model.

2. Python Environment: A Python environment with the necessary libraries (vertexai, google-cloud-aiplatform) installed.

3. Authentication: Proper authentication setup, which includes creating a service account with access to Vertex AI through a role such as Vertex AI User (roles/aiplatform.user). A key for this service account then needs to be downloaded to the application environment and set as the default application credentials:

Python

import os

os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = "path/to/service/account/key"

4. Model Selection: Choosing the appropriate Gemini 1.5 model variant (Flash or Pro) based on your specific needs.

Gemini models can be accessed through the Python SDK, VertexAI, or through LLM frameworks such as Langchain. However, for this piece, we will stick to using the Python SDK, which can be installed using:

Shell

pip install vertexai

pip install google-cloud-aiplatform

To access Gemini through this method, the Vertex AI API must be enabled on a Google Cloud project. The project ID and location need to be passed to the SDK at initialization, so that Gemini can be accessed through the client SDK.

Python

import vertexai

# Replace the placeholders with your own project ID and region.
vertexai.init(project="project_id", location="project_location")
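Rather than hard-coding the project details, they can be read from the environment. The helper below is a small sketch of that idea: the function name `vertex_init_kwargs`, the variable name `GOOGLE_CLOUD_LOCATION`, and the `us-central1` default are assumptions made for illustration, so adjust them to whatever your deployment uses.

```python
import os
from typing import Dict, Mapping, Optional


def vertex_init_kwargs(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Build the keyword arguments for vertexai.init() from environment variables."""
    env = os.environ if env is None else env
    project = env.get("GOOGLE_CLOUD_PROJECT")
    if not project:
        raise RuntimeError("Set GOOGLE_CLOUD_PROJECT to your GCP project ID")
    return {
        "project": project,
        # Hypothetical variable name and default region; change as needed.
        "location": env.get("GOOGLE_CLOUD_LOCATION", "us-central1"),
    }


# Usage (requires the SDK and valid credentials):
# import vertexai
# vertexai.init(**vertex_init_kwargs())
print(vertex_init_kwargs({"GOOGLE_CLOUD_PROJECT": "demo-project"}))
```

Failing early with a clear message is usually easier to debug than an authentication error raised later by the first model call.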

From here, to access the model and begin prompting, the GenerativeModel class needs to be imported from the vertexai.generative_models module and instantiated. This can be done by:

Python

from vertexai.generative_models import GenerativeModel, ChatSession, Part

# The system instruction sets the assistant's role for every request.
model_object = GenerativeModel(
    model_name="gemini-1.5-flash-001",
    system_instruction="You are a helpful assistant who reads documents and answers questions.",
)

From here, we are ready to start prompting our conversational system. But, as Gemini is so versatile, there are many methods to interact with the model, depending on the use case. In this piece, I will cover the three main methods, and the ones I see as the most practical for building architectures:

Chat Session Interaction

VertexAI generative models are able to store their own history while the instance is open. This means that no external service is needed to store the conversation history. This history can be saved, exported, and further modified. Now, the history object comes as an attribute to the ChatSession object that we imported earlier. This ChatSession object can be instantiated by:

Python

chat = ChatSession(model=model_object)

Further attributes can be added, including the aforementioned chat history which allows the model to have a simulated history to continue the conversation from. This chat session object is the interface between the user and the model. When a new prompt needs to be pushed to the ChatSession, that can be accomplished by using:

Python

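# prompt is the user's message as a string; stream=False returns the full reply at once.
response = chat.send_message(prompt, stream=False)

print(response.text)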

This method simulates a multi-turn chat session, where context is preserved throughout the whole conversation for as long as the instance of the chat session is active. The history is maintained by the chat session object, which allows the user to ask antecedent-driven questions whose object isn’t stated, such as “What did you mean by that?” or “How can I improve this?”. This chat session method is ideal for chat interface scenarios, such as chatbots, personal assistants and more.
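For chat interfaces it is often nicer to show the reply as it arrives. When `send_message` is called with `stream=True`, the SDK returns an iterable of partial responses, each exposing a `.text` attribute. The helper below is a sketch of how those pieces might be displayed and stitched together; `collect_stream` and `show` are hypothetical names, and the fake chunks stand in for real responses so the example runs without credentials.

```python
from types import SimpleNamespace
from typing import Callable, Iterable, List


def show(piece: str) -> None:
    """Print a piece of the reply immediately, without adding a newline."""
    print(piece, end="", flush=True)


def collect_stream(chunks: Iterable, on_chunk: Callable[[str], None] = show) -> str:
    """Display each streamed chunk as it arrives and return the full reply text."""
    parts: List[str] = []
    for chunk in chunks:
        on_chunk(chunk.text)
        parts.append(chunk.text)
    return "".join(parts)


# Offline demo with fake chunks. With the SDK, pass the real stream instead:
# full_reply = collect_stream(chat.send_message(prompt, stream=True))
fake_chunks = [SimpleNamespace(text="Hel"), SimpleNamespace(text="lo")]
full_reply = collect_stream(fake_chunks)
```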

The history created by the model can be saved later and reloaded, during the instantiation of the chat session model, like so:

Python

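# Save the history from the current session.
history = chat.history

# Start a new session that continues from the saved history.
chat = ChatSession(model=model_object, history=history)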

And from here, the chat session can be continued in the multi-turn chat format. This method is very straightforward and eliminates the need for external frameworks such as LangChain to manage conversations and load them back into the model. It maintains the full functionality of the Gemini models while minimizing the overhead required to have a full chat interface.
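If the history has to survive a process restart, it can be written to disk between sessions. The sketch below assumes that each history item offers a `to_dict()` method and that the SDK's `Content` class offers a matching `from_dict()` constructor, which should be verified against the installed SDK version; `dump_history` and `load_history` are hypothetical helper names.

```python
import json
from typing import Callable, Iterable, List


def dump_history(history: Iterable, path: str) -> None:
    """Write chat history to a JSON file; each item must provide to_dict()."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump([item.to_dict() for item in history], f)


def load_history(path: str, from_dict: Callable[[dict], object]) -> List[object]:
    """Read the JSON file back and rebuild each item with the given constructor."""
    with open(path, encoding="utf-8") as f:
        return [from_dict(item) for item in json.load(f)]


# With the SDK (assumed API; requires credentials):
# from vertexai.generative_models import ChatSession, Content
# dump_history(chat.history, "history.json")
# restored = load_history("history.json", Content.from_dict)
# chat = ChatSession(model=model_object, history=restored)
```

Passing the constructor in as an argument keeps the helpers free of SDK imports, so they can be tested with simple stand-in objects.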

Single Turn Chat Method

If the functionality required doesn’t call for a multi-turn chat methodology, or there isn’t a need for history to be saved, the Gemini SDK includes methods that work as a single-turn chat method, similar to how image generation interfaces work. Each call to the model acts as an independent session with no session tracking or knowledge of past conversations. This reduces the overhead required to create the interface while still having a fully functional solution.

For this method, the model object can be used directly, like so:

Python

# GenerativeModel is the class imported from vertexai.generative_models earlier.
model = GenerativeModel("gemini-1.5-flash-001")

response = model.generate_content("What is the capital of Karnataka?")

print(response.text)

Here, a message is pushed to the model directly without any history or context added to the prompt. This method is beneficial for any use case that only requires a single turn messaging, for example grammar correction, some basic suggestions, and more.
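As an illustration of the grammar-correction use case, the sketch below sends one independent request per sentence. It works with any object that has a `generate_content` method returning a response with a `.text` attribute, as the SDK's model object does; the prompt wording and the `correct_all` helper are hypothetical, and the fake model only exists so the example runs without credentials.

```python
from types import SimpleNamespace
from typing import List

PROMPT_TEMPLATE = (
    "Correct the grammar of the following sentence. "
    "Return only the corrected sentence.\n\n{sentence}"
)


def correct_all(model, sentences: List[str]) -> List[str]:
    """Send one single-turn request per sentence; no history is shared between calls."""
    return [
        model.generate_content(PROMPT_TEMPLATE.format(sentence=s)).text.strip()
        for s in sentences
    ]


class EchoModel:
    """Offline stand-in for a real model: returns the last line of the prompt."""

    def generate_content(self, prompt: str):
        return SimpleNamespace(text=prompt.splitlines()[-1])


# With the SDK, pass the real model instead of EchoModel():
# corrected = correct_all(model, ["She go to school.", "They is happy."])
print(correct_all(EchoModel(), ["She go to school."]))
```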

Context Caching

Typically, LLMs aren’t used on their own; they are grounded with a source of truth. This gives them a knowledge bank to draw on when they answer questions, which reduces the chance that they hallucinate or get information wrong. This is usually accomplished with a retrieval-augmented generation (RAG) system. However, the long context window of Gemini 1.5 can make that process unnecessary in many cases. Of course, one could just push the extracted text of a document directly into the prompt, but when the set of documents is large (roughly 33,000 tokens or more) and will be queried repeatedly, Vertex AI has a method for that: context caching.

Context caching works by storing a set of documents, together with the model name and system instruction, in a cache object, and then initiating a chat session from this cache. This eliminates the need to build a RAG pipeline, as the model has the documentation to refer to on demand.

Python

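import datetime

from vertexai.generative_models import Part
from vertexai.preview import caching

# Reuses the system instruction from the model set-up above.
system_instruction = "You are a helpful assistant who reads documents and answers questions."

# Each document is referenced by its Cloud Storage URI and wrapped in a Part.
contents = [
    Part.from_uri(
        "gs://cloud-samples-data/generative-ai/pdf/2312.11805v3.pdf",
        mime_type="application/pdf",
    ),
    Part.from_uri(
        "gs://cloud-samples-data/generative-ai/pdf/2403.05530.pdf",
        mime_type="application/pdf",
    ),
]

# Create the cache; it expires after the time to live (60 minutes here).
cached_content = caching.CachedContent.create(
    model_name="gemini-1.5-pro-001",
    system_instruction=system_instruction,
    contents=contents,
    ttl=datetime.timedelta(minutes=60),
)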

The cached documents can be sourced from any Cloud Storage location, and each one is wrapped in a Part object. Part objects are the basic data type from which multi-turn chat messages are built. They are collected into a list and passed to the cache object together with the system instruction. Along with the contents, a time to live (ttl) parameter is also expected. This sets an expiration time for the cache, which aids in security and memory management.

Now, to use this cache, a model needs to be created from the cache variable. This can be done through:

Python

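# In SDK versions where caching is a preview feature, import GenerativeModel
# from vertexai.preview.generative_models instead.
model = GenerativeModel.from_cached_content(cached_content=cached_content)

response = model.generate_content("What are the papers about?")

print(response.text)

# Alternatively, a ChatSession object can be used with this model to create a multi-turn chat interface.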
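Whether a cache is worth creating depends on how large the documents are and how often they will be queried. The sketch below captures that decision under two assumptions: that the minimum cacheable size is 32,768 tokens (in line with the figure of roughly 33,000 mentioned above, but worth checking against current documentation) and that caching only pays off when more than one query is expected. `should_cache` and `token_count` are hypothetical helper names; `count_tokens` is the SDK method that returns an exact count.

```python
from types import SimpleNamespace

MIN_CACHE_TOKENS = 32_768  # assumed minimum cacheable size; check current docs


def should_cache(total_tokens: int, expected_queries: int) -> bool:
    """Cache only when the content is large enough and will be reused."""
    return total_tokens >= MIN_CACHE_TOKENS and expected_queries > 1


def token_count(model, contents) -> int:
    """Ask the model for an exact token count via its count_tokens method."""
    return model.count_tokens(contents).total_tokens


class FakeModel:
    """Offline stand-in so the example runs without credentials."""

    def count_tokens(self, contents):
        return SimpleNamespace(total_tokens=50_000)


# With the SDK, pass the real model and the list of Part objects instead.
n = token_count(FakeModel(), ["large document text"])
print(n, should_cache(n, expected_queries=10))
```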

The key advantage of the Gemini 1.5 family of models is their strong support for multimodal prompts. Part objects can be used to encode all types of inputs. By adjusting the mime_type parameter, a Part object can represent other input types, including audio, video, and images. Whatever the input type, the object can be appended to the input list, and the model will interpret it alongside the rest of the prompt.
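Because the MIME type has to match the file, it can help to derive it from the file extension before building each Part. The mapping and helper names below (`MIME_BY_EXTENSION`, `mime_type_for`, `part_kwargs`) are illustrative assumptions covering a few common formats, and the bucket path in the usage comment is hypothetical.

```python
import posixpath
from typing import Dict

# A small, explicit mapping; extend it with the formats your application accepts.
MIME_BY_EXTENSION: Dict[str, str] = {
    ".pdf": "application/pdf",
    ".png": "image/png",
    ".jpg": "image/jpeg",
    ".jpeg": "image/jpeg",
    ".mp3": "audio/mpeg",
    ".wav": "audio/wav",
    ".mp4": "video/mp4",
}


def mime_type_for(uri: str) -> str:
    """Return the MIME type for a file URI based on its extension."""
    extension = posixpath.splitext(uri)[1].lower()
    if extension not in MIME_BY_EXTENSION:
        raise ValueError(f"no MIME type configured for {uri!r}")
    return MIME_BY_EXTENSION[extension]


def part_kwargs(uri: str) -> Dict[str, str]:
    """Build the keyword arguments for Part.from_uri()."""
    return {"uri": uri, "mime_type": mime_type_for(uri)}


# With the SDK (hypothetical bucket path):
# from vertexai.generative_models import Part
# video_part = Part.from_uri(**part_kwargs("gs://my-bucket/demo.mp4"))
print(part_kwargs("gs://cloud-samples-data/generative-ai/pdf/2312.11805v3.pdf"))
```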

And voila! You’ve made your very own multimodal AI assistant using Gemini 1.5. Note that this is just a jumping off point. The VertexAI SDK has functionality that supports building complex Agentic systems, image generation, model tuning and more. The scope of Google’s foundational models and the surrounding support structure is ever-growing, and gives seasoned developers and newbies alike unprecedented power to build groundbreaking, superbly effective, and responsible applications.

Ankercloud: Partners with AWS, GCP, and Azure

We excel through partnerships with industry giants like AWS, GCP, and Azure, offering innovative solutions backed by leading cloud technologies.

The Ankercloud Team loves to listen
